A serverless URL shortener - part 2

25/11/2022

Following on from my previous post, I had a functionally complete proof of concept (POC) of my serverless URL shortener running on Azure Static Web Apps, Azure Functions, Azure Storage and Azure Front Door. The next step was to validate that I had met the non-functional requirements for request throughput, and to find out how cost-effective the solution is.

Load Testing

I wanted to use the relatively new Azure Load Testing service, which I hadn't used before. It works by running Apache JMeter scripts in the cloud, so first I needed to write a test script. The script needed to be reasonably representative of real traffic, so I decided to test an overall throughput of 40 req/s, split as:

- 95% to the URL mapping function, simulating normal users getting successful responses

- 5% to the admin API, targeting a URL that wasn't present (this simulates unsuccessful lookups that still hit the database looking for a non-existent URL, and also simulates admin use).
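JMeter scripts are XML and the post doesn't show the actual script, so rather than guess at it, here is a minimal Python sketch of the arithmetic behind the traffic mix: 40 req/s split 95/5 gives 38 req/s for URL mapping and 2 req/s for the admin API. The endpoint keys are hypothetical labels, and the weighted random picker is just one way to model the mix (in JMeter itself you might use separate thread groups or a Throughput Controller instead).

```python
import random
from collections import Counter
from typing import Dict

# Overall target and traffic mix from the test plan; the endpoint keys are hypothetical labels.
TOTAL_RPS = 40
MIX: Dict[str, float] = {
    "url_mapping": 0.95,    # normal users resolving an existing short URL
    "admin_missing": 0.05,  # admin API lookups for a URL that doesn't exist
}


def per_endpoint_rps(total_rps: float, mix: Dict[str, float]) -> Dict[str, float]:
    """Split an overall request rate across endpoints in proportion to their weights."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return {name: total_rps * weight for name, weight in mix.items()}


def pick_endpoint(rng: random.Random, mix: Dict[str, float]) -> str:
    """Choose the endpoint for one simulated request, honouring the weights."""
    names = list(mix)
    return rng.choices(names, weights=[mix[name] for name in names], k=1)[0]


if __name__ == "__main__":
    print(per_endpoint_rps(TOTAL_RPS, MIX))
    rng = random.Random(42)  # fixed seed so runs are repeatable
    print(Counter(pick_endpoint(rng, MIX) for _ in range(10_000)))
```

Over 10,000 simulated requests the weighted picker lands close to the intended 95/5 split, which is a quick sanity check before committing the mix to a real test plan.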

I switched off the cache headers in Static Web Apps so that I could test the non-cached performance (i.e. every request hits the API and storage).

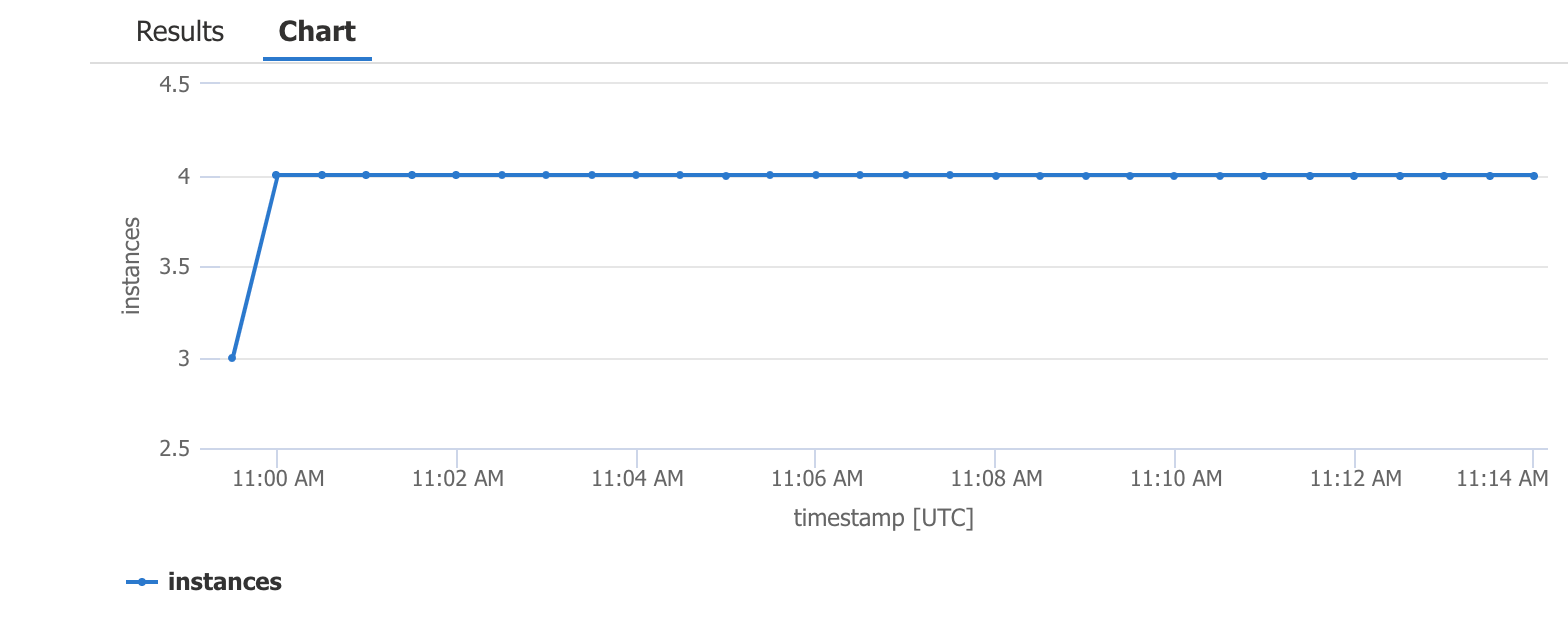

I then ran the JMeter test and checked Log Analytics to see how many instances the app scaled out to: it reached 4 within a minute of the test starting. Most requests completed in the 150-200 ms range, but several took multiple seconds because of serverless cold starts.
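A handful of multi-second cold starts barely move the median but dominate the tail, which is why percentiles tell you more than an average here. A minimal Python sketch of a nearest-rank percentile; the latency figures in it are invented purely for illustration and are not from the test run.

```python
import math
from typing import List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


if __name__ == "__main__":
    # Invented data: 990 warm requests at 175 ms and 10 cold starts at 3,000 ms.
    latencies_ms = [175.0] * 990 + [3000.0] * 10
    print(percentile(latencies_ms, 50))           # 175.0
    print(percentile(latencies_ms, 99))           # 175.0
    print(percentile(latencies_ms, 99.5))         # 3000.0
    print(sum(latencies_ms) / len(latencies_ms))  # 203.25
```

With 1% of requests hitting a cold start, the median and p99 stay at the warm latency while p99.5 jumps to the cold-start time, so a single average figure hides exactly the behaviour users notice.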

I ran the costs through the Azure Pricing Calculator. Based on the Static Web App, Functions, bandwidth and storage costs, the worst-case monthly cost of running the solution continuously at this load would be:

| Azure SKU | Cost (per month) |
|---|---|
| Static Web App | £7.77 |
| Azure Functions | £133.84 |
| Bandwidth | £17.27 |
| Storage | £3.15 |
| Total | £162.03 |

As you can see, compute is the largest component of the cost. Still, for around 100 million hits per month, it isn't bad at all.
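The "100 million hits per month" figure follows from the 40 req/s test rate. A quick Python check, assuming a 30-day month, which also derives an approximate cost per million requests from the table; `Decimal` avoids floating-point noise when adding up currency.

```python
from decimal import Decimal

SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumption: a 30-day month (2,592,000 s)

# Monthly costs (GBP) from the uncached cost table.
UNCACHED_COSTS = {
    "Static Web App": Decimal("7.77"),
    "Azure Functions": Decimal("133.84"),
    "Bandwidth": Decimal("17.27"),
    "Storage": Decimal("3.15"),
}


def monthly_requests(rps: float) -> int:
    """Requests served in a month at a constant rate."""
    return round(rps * SECONDS_PER_MONTH)


def cost_per_million(total_cost: Decimal, requests: int) -> Decimal:
    """Cost per million requests, rounded to the penny."""
    return (total_cost * 1_000_000 / requests).quantize(Decimal("0.01"))


if __name__ == "__main__":
    requests = monthly_requests(40)
    total = sum(UNCACHED_COSTS.values())
    print(f"{requests:,} requests/month")  # 103,680,000 requests/month
    print(f"£{total} total, £{cost_per_million(total, requests)} per million")  # £162.03 total, £1.56 per million
```

A 31-day month pushes the volume to about 107 million requests, so "around 100 million" holds either way.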

Caching all the things

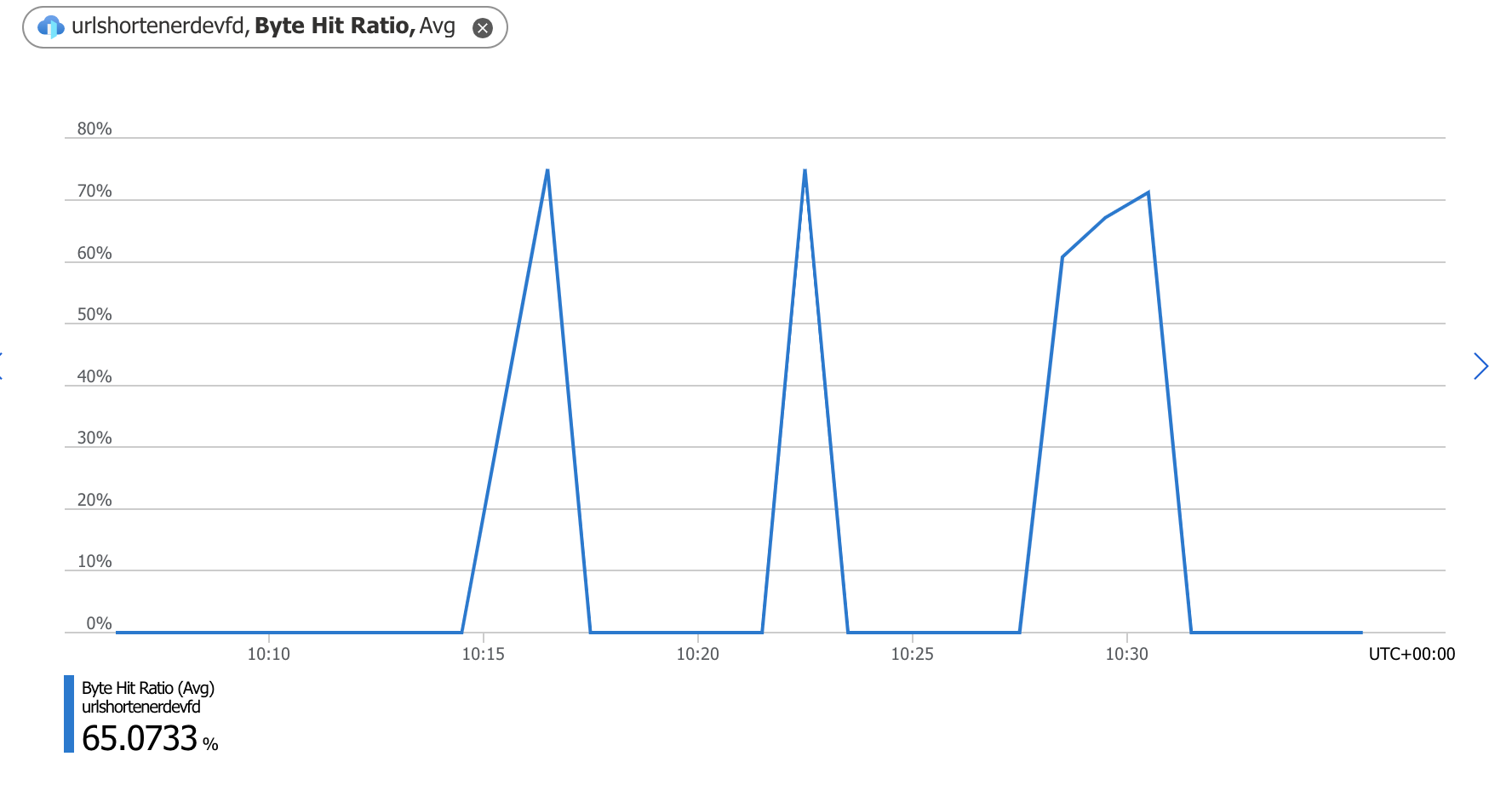

I then changed the Static Web Apps configuration so that the URL mapping endpoint was cached. In theory, this meant that 95% of the traffic would be served from the Azure Front Door cache. I then reran the load test.
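For an edge cache to serve a response, the origin has to mark it as reusable via its `Cache-Control` header. Below is a simplified Python sketch of that decision for a shared cache, following general HTTP caching semantics (RFC 9111) rather than Front Door's exact rules, which may differ in detail; the function name and the example header values are illustrative, not the post's actual configuration.

```python
from typing import Optional


def shared_cache_can_reuse(cache_control: Optional[str]) -> bool:
    """Simplified check of whether a shared cache (e.g. a CDN edge) may store and reuse
    a response without going back to the origin, based only on Cache-Control."""
    if not cache_control:
        return False  # conservative: ignore heuristic caching when no directives are sent
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        directives[name.strip().lower()] = value.strip().strip('"')
    # no-store and private both forbid a shared cache from storing the response.
    if "no-store" in directives or "private" in directives:
        return False
    # no-cache permits storage but forces revalidation on every use, so no offload benefit.
    if "no-cache" in directives:
        return False
    # s-maxage applies to shared caches and takes precedence over max-age.
    ttl = directives.get("s-maxage", directives.get("max-age"))
    if ttl is not None:
        return ttl.isdigit() and int(ttl) > 0
    return "public" in directives


if __name__ == "__main__":
    for header in ["public, max-age=3600", "no-store", "private, max-age=60", "s-maxage=600, max-age=0"]:
        print(f"{header!r}: {shared_cache_can_reuse(header)}")
```

Under these rules `public, max-age=3600` can be served from the edge, while `no-store` (the effect of switching caching off for the first run) sends every request to the origin.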

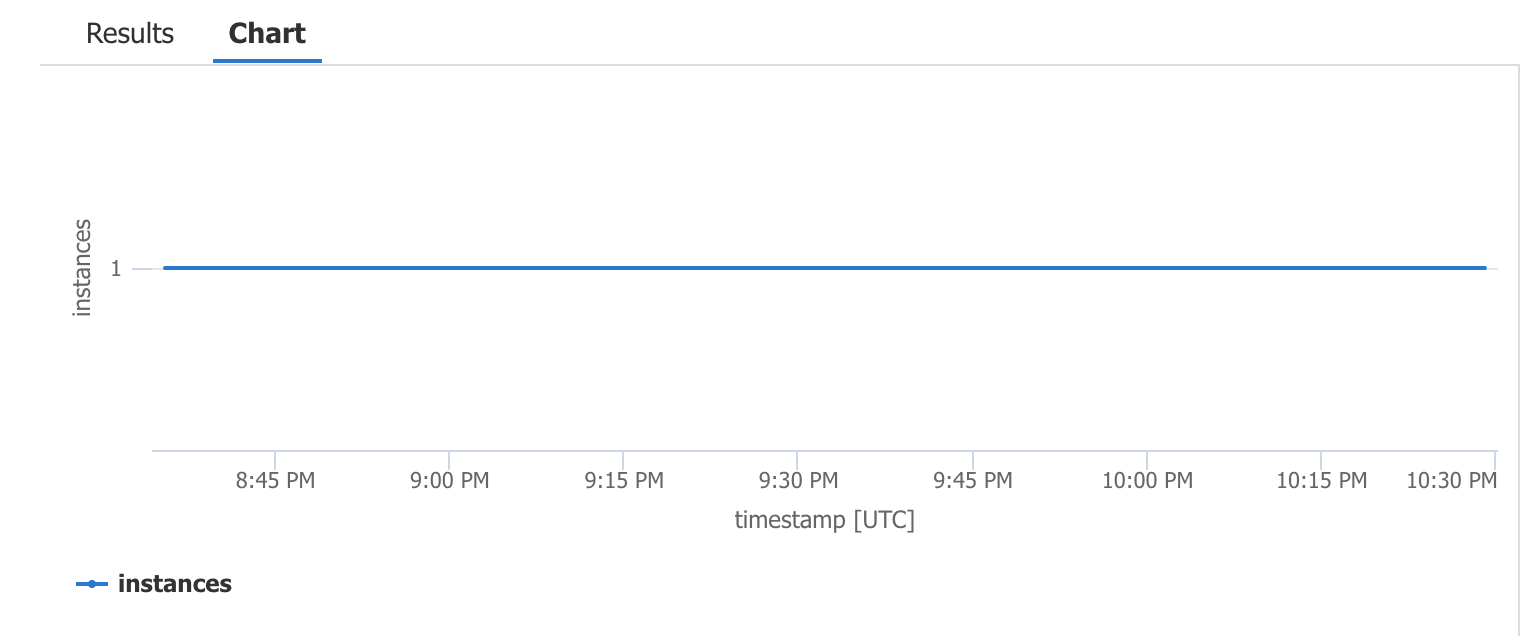

The cache hit ratio achieved by Front Door was about 70%, which surprised me, as I had expected it to be higher. In this second run, the average response time for cached results fell to 90-120 ms, the Functions app scaled to a single instance, and function executions tracked at around 30% of the overall number of requests.
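The gap between the 95% of cacheable traffic and the observed 70% hit ratio matters because every miss lands on the Functions origin. A minimal Python sketch of that arithmetic: at 40 req/s, a 70% hit ratio leaves about 12 req/s for the origin, whereas in the hypothetical ideal case where every URL mapping request is a hit, only the 2 req/s of uncached admin calls would get through.

```python
def origin_rps(total_rps: float, hit_ratio: float) -> float:
    """Requests per second that miss the edge cache and reach the Functions origin."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return total_rps * (1.0 - hit_ratio)


if __name__ == "__main__":
    observed = origin_rps(40, 0.70)  # hit ratio seen in the second run
    ideal = origin_rps(40, 0.95)     # hypothetical: every URL mapping request is a hit
    print(f"observed: {observed:.1f} req/s to origin")  # observed: 12.0 req/s to origin
    print(f"ideal:    {ideal:.1f} req/s to origin")     # ideal:    2.0 req/s to origin
```

The observed 30% of traffic reaching the origin lines up with the function executions tracking at about 30% of requests.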

The cost of this configuration was a little over half of the original (£91.99 against £162.03). However, because most of the Front Door cost is fixed, there is a break-even point: at a much lower request rate, the compute saved by caching would cost less than Front Door itself. This is why Azure architecture is more of an art than a science!

| Azure SKU | Cost (per month) |
|---|---|
| Front Door | £32.31 |
| Static Web App | £7.77 |
| Azure Functions | £39.89 |
| Bandwidth | £1.73 |
| Storage | £10.30 |
| Total | £91.99 |
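The break-even point can be estimated with a deliberately simplified model, which is an assumption rather than how the pricing calculator works: Functions cost scales linearly with the requests that reach it, Front Door is treated as an entirely fixed cost, and bandwidth and storage changes are ignored. Caching saves `cost_per_request * N * hit_ratio` a month, so it pays for itself once that exceeds the Front Door cost. A Python sketch using the figures from the two tables:

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumes a 30-day month


def break_even_requests(fixed_cdn_cost: float, cost_per_request: float, hit_ratio: float) -> float:
    """Monthly request volume at which caching saves exactly as much compute as the CDN costs.

    Simplified model (an assumption):
      without CDN: cost_per_request * N
      with CDN:    fixed_cdn_cost + cost_per_request * N * (1 - hit_ratio)
    The two are equal when N = fixed_cdn_cost / (cost_per_request * hit_ratio).
    """
    if cost_per_request <= 0 or not 0 < hit_ratio <= 1:
        raise ValueError("cost_per_request must be positive and hit_ratio in (0, 1]")
    return fixed_cdn_cost / (cost_per_request * hit_ratio)


if __name__ == "__main__":
    # Functions cost at 40 req/s with no caching, assumed to scale linearly with requests.
    requests_per_month = 40 * SECONDS_PER_MONTH  # 103,680,000
    cost_per_request = 133.84 / requests_per_month
    front_door_cost = 32.31  # GBP/month, treated as entirely fixed (an assumption)

    n = break_even_requests(front_door_cost, cost_per_request, hit_ratio=0.7)
    print(f"break-even: ~{n / 1e6:.1f}M requests/month (~{n / SECONDS_PER_MONTH:.1f} req/s)")
```

Under these assumptions the break-even lands at roughly 36 million requests a month, or about 14 req/s; below that, Front Door costs more than the compute it saves. The linear assumption is loosely supported by the tables, where Functions cost fell to about 30% of its uncached value, matching the share of requests that missed the cache.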

What did I learn?

This exercise was a great learning experience. It proved to me that serverless approaches can both simplify the developer experience and be cost-effective. Caching responses up front made a massive difference. The best request is one you don't have to serve at all!

I was pleased with Table Storage in terms of both speed and ease of use. Where the data model and query needs allow it, it would probably be one of my first choices for data storage, along with Cosmos DB, reaching for Azure SQL only when I need it. The Azure Load Testing service also proved effective.

On the negative side, cold starts can be a problem: with .NET functions, the response time could be up to 4 seconds, and even with a pre-warmed instance, people will occasionally see slow responses as load scales up and down. There is also some overhead in the Functions runtime. I would be interested to see whether porting this to another language, or to a container app using a different framework, would reduce the response time.

Finally, I would like to see whether another layer of caching at the app level would help, perhaps adding a Redis cache to hold hot responses in memory rather than reading them from storage. Perhaps that will be another post!
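The app-level cache described above is the cache-aside pattern. This is a minimal Python sketch of it, not an implementation from the post: an in-process dictionary stands in for Redis, and a hypothetical `loader` function stands in for the Table Storage lookup. It also caches "not found" results for the TTL, a design choice of this sketch that would shield storage from repeated lookups of non-existent URLs like the ones in the admin traffic.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TtlCacheAside:
    """In-process stand-in for a Redis cache in front of storage.

    Cache-aside: check the cache first; on a miss or expiry, load from the backing
    store and keep the result (including None for "not found") for ttl_seconds.
    """

    def __init__(self, loader: Callable[[str], Optional[str]], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._loader = loader
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Optional[str]]] = {}  # key -> (expires_at, value)

    def get(self, key: str) -> Optional[str]:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # hit: served from memory
        value = self._loader(key)  # miss or expired: go to storage
        self._entries[key] = (now + self._ttl, value)
        return value


if __name__ == "__main__":
    store = {"abc123": "https://example.com/some/long/path"}  # hypothetical mapping data
    calls = []

    def load_from_storage(key: str) -> Optional[str]:
        calls.append(key)  # record each trip to the backing store
        return store.get(key)

    cache = TtlCacheAside(load_from_storage, ttl_seconds=60)
    cache.get("abc123")
    cache.get("abc123")
    print(len(calls))  # 1
```

With a real Redis instance the same shape maps onto a `GET`, falling back to storage on a miss and then a `SET` with an `EX` expiry.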