An experiment in coding with Claude

27/02/2025

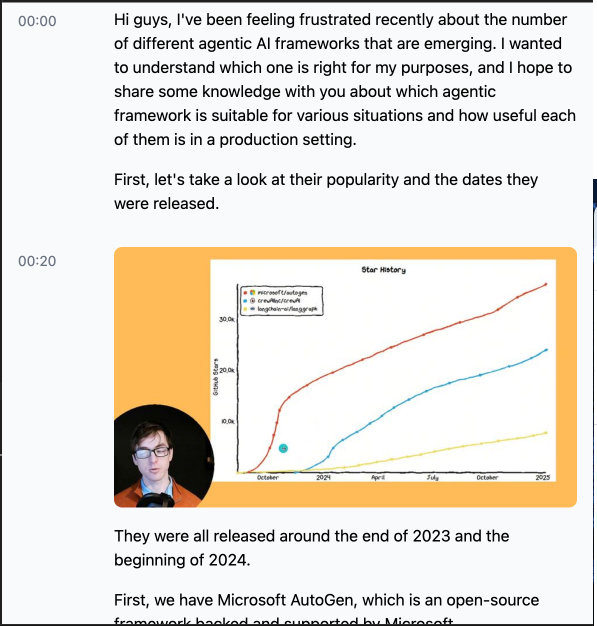

Most of my working week has been spent talking to customers about generative AI, and I've got lots I'd like to say on that in the future.

However, a lot of the work we're doing in that space in the day job relies on low-code/no-code tooling because, in the main, our clients don't have in-house developers. There, AI has been a godsend, because development is largely a matter of writing prose and then creating tools, either with low-code workflows (such as Power Automate) or by bridging into custom APIs. I've found that although the AI part is good, clients still struggle with the logic required for data transformation and integration with other systems, and with the practical side of dealing with those systems: handling failures, retries and that sort of thing. In other words, the parts that benefit most from technical experience.

I'm interested in how far AI can take you in improving productivity, so as part of my spare-time coding I thought I'd run an experiment to see how far I could get with a small project, with Claude as my assistant.

Note: this experiment was done before the release of Sonnet 3.7. I'll update once I've really had a chance to kick the tyres!

The idea

The idea was to generate a readable version of an online video, with a twist of my own. This was something I'd wanted to do for a while to help me catch up with conferences, but also more generally, as so much good content is now available only as video. I'm sure there are services that already do something similar, but I had a few specific requirements of my own. These were:

- I wanted to be able to run it locally and consume the content offline (sadly, although 5G is coming to the Tube, it's not everywhere yet).

- I wanted to retain the level of detail in the transcript while making the writing readable and grammatically correct, so a raw transcript on its own wasn't enough.

- I wanted to make sure that any significant visuals were captured, so I could see diagrams, slides, etc. in context.

- I wanted to be able to jump to the relevant point in the video, if needed, to confirm what was said.
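One way to meet that last requirement is to link each paragraph of the readable version to the time in the video it came from. The sketch below is an illustration, not code from the project: the function names are hypothetical, and it assumes the page points at a local copy of the video, using the W3C Media Fragments `#t=<seconds>` syntax that browsers generally honour for HTML5 video sources.

```python
import html


def format_timestamp(seconds: float) -> str:
    """Render seconds as H:MM:SS, or M:SS for anything under an hour."""
    total = int(seconds)
    hours, rem = divmod(total, 3600)
    minutes, secs = divmod(rem, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}" if hours else f"{minutes}:{secs:02d}"


def video_link(video_url: str, seconds: float) -> str:
    """Append a Media Fragments temporal offset (#t=) to a video URL.

    Truncating to whole seconds lands slightly before the spoken sentence.
    """
    return f"{video_url}#t={int(seconds)}"


def timestamp_anchor(video_url: str, seconds: float) -> str:
    """HTML link whose text is the timestamp and whose target seeks the video."""
    href = html.escape(video_link(video_url, seconds), quote=True)
    return f'<a href="{href}">{format_timestamp(seconds)}</a>'
```

For example, `timestamp_anchor("talk.mp4", 75)` gives `<a href="talk.mp4#t=75">1:15</a>`. For a YouTube URL the equivalent would be a `t` query parameter rather than a fragment, and in a Jinja-rendered page the first two helpers could be registered as filters (e.g. `env.filters["timestamp"] = format_timestamp`).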

This felt small enough that I could knock it up relatively easily, but specific enough that I wouldn't find something identical (although I'd be interested to hear of any!)

The experiment

I set myself the following boundaries:

- As much as possible had to be done by the AI rather than by me. Using me for feedback and error checking was fine, but I didn't want to write much code myself.

- I wanted the AI to guide me as much as possible, so rather than suggesting solutions, I asked it for possible approaches before getting it to write any code.

- Claude would be the main AI, so I wanted to use as many of its features as possible (e.g. Projects).

- I wanted it in Python for a couple of reasons: as a personal project, I was happy to forge my own path, and I knew I'd probably be using AI libraries, so I went where the ecosystem was strongest.

What I did

I set up a project in Claude (my current coding AI of choice) and instructed it to create a Python application using local libraries rather than remote APIs. Then, over the course of a couple of commutes to work, I instructed it to create several scripts to:

- Download the video

- Look for parts of the video where the screen didn't change significantly for a defined length of time (it used OpenCV for this)

- Transcribe the video (it chose Faster Whisper)

- Use an LLM to clean up the transcript (in the end we settled on llama.cpp)

- Generate a webpage (I had to prompt it here to use Jinja templates rather than other approaches)
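The static-screen detection step might look roughly like the sketch below. This is not the code Claude produced: it assumes a slide or diagram shows up as a run of frames whose mean absolute pixel difference from the previous frame stays under a threshold, and the threshold and minimum-duration defaults are arbitrary placeholders. `frame_diffs` uses OpenCV (`cv2.VideoCapture`, `cv2.absdiff`) and imports it lazily, so the span-finding logic can be tried without OpenCV installed.

```python
from dataclasses import dataclass


@dataclass
class StableSpan:
    """A stretch of video (in seconds) where the picture barely changes."""
    start_s: float
    end_s: float


def find_stable_spans(diffs, fps, threshold=2.0, min_duration_s=3.0):
    """Find runs where frame-to-frame change stays below `threshold`.

    diffs[i] is the mean absolute pixel difference between frame i and i+1.
    Runs shorter than `min_duration_s` seconds are ignored.
    """
    spans = []
    run_start = None
    # A trailing sentinel "big change" closes a run that reaches the last frame.
    for i, d in enumerate(list(diffs) + [float("inf")]):
        if d < threshold:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            start_s, end_s = run_start / fps, i / fps
            if end_s - start_s >= min_duration_s:
                spans.append(StableSpan(start_s, end_s))
            run_start = None
    return spans


def frame_diffs(video_path):
    """Return (diffs, fps) for a video file, decoding every frame with OpenCV."""
    import cv2  # imported lazily so find_stable_spans works without OpenCV

    cap = cv2.VideoCapture(video_path)
    fps = cap.get(cv2.CAP_PROP_FPS) or 25.0  # assumed fallback if FPS is unreported
    diffs, prev = [], None
    while True:
        ok, frame = cap.read()
        if not ok:
            break
        gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        if prev is not None:
            diffs.append(float(cv2.absdiff(gray, prev).mean()))
        prev = gray
    cap.release()
    return diffs, fps
```

Usage would be along the lines of `spans = find_stable_spans(*frame_diffs("talk.mp4"))`, then saving one frame from each span as the image for the page. Decoding every frame is slow for an hour-long talk, so sampling fewer frames would be an obvious next step.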

Once I'd got something working, I asked it to write a driver script that imports the other scripts and calls them in order. At each stage I added the files to the project knowledge and, after a while, regularly pruned old ones.
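A driver of that shape can be sketched as a list of named stages that each take and return a shared context dict. This is a hypothetical structure for illustration, not the script Claude wrote; the stage names and the commented-out module wiring are assumptions.

```python
import time
from typing import Callable

# A stage takes the shared context dict and returns it, possibly updated.
Stage = Callable[[dict], dict]


def run_pipeline(stages: list[tuple[str, Stage]], context: dict) -> dict:
    """Run each named stage in order, threading one context dict through them."""
    for name, stage in stages:
        started = time.perf_counter()
        try:
            context = stage(context)
        except Exception as exc:
            # Name the failing stage so errors are easy to trace back.
            raise RuntimeError(f"stage {name!r} failed") from exc
        print(f"{name}: done in {time.perf_counter() - started:.1f}s")
    return context


# Hypothetical wiring: these module and function names are illustrative only.
# import download, scenes, transcribe, cleanup, render
# run_pipeline(
#     [("download", download.run), ("scenes", scenes.run),
#      ("transcribe", transcribe.run), ("cleanup", cleanup.run),
#      ("render", render.run)],
#     {"url": VIDEO_URL},
# )
```

Keeping every stage behind the same `dict -> dict` signature is one way to make it easier to swap an implementation (say, a different transcription library) without touching the driver.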

Outcome

It worked (eventually)! See the screenshot below:

Issues

Unfortunately, it wasn't all plain sailing. I encountered several issues, including:

- The LLM really had to be prompted hard to get off its default path - for example, asking it to change the method of transcription to another library (but keeping the same interface) failed more often than not. Sometimes a chat outside the project got better results.

- When it was making significant changes, fixes that were already in the files in the project knowledge would get lost. For example, when I asked it to change the data being rendered, it would revert to the styling it had originally chosen rather than keep the styling already present in the project file. This was quite frustrating, and though Claude is very pleasant, I did find myself getting a bit shirty at times! "You've lost the fixes we did to the styling, can you restore them please??"

- When revising files, it would occasionally start duplicating content and get very confused. Starting a new chat generally fixed this.

- Imports were often a problem: it would try to use modules that it hadn't imported. As Claude doesn't yet have a built-in Python interpreter, it couldn't catch this; it probably wouldn't have been as much of a problem with ChatGPT, for example, which can run Python code.

- Style was all over the place: sometimes things were done as classes, sometimes as dataclasses, sometimes as standalone functions. Adding style guidance to the project instructions at the start would most probably have helped; something to try next time.

- It was very bad at async: trying to get it to parallelise work often led to poorer performance (which I normally tracked down to bad use of the library or to text not being chunked correctly). I ended up reverting most of the scripts to run sequentially. I wasn't altogether surprised by this, though; human programmers find concurrency difficult too! I'd also seen the same issues in other work I'd been doing.
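Since chunking was one of the culprits, here is a minimal sketch of a sequential clean-up step. It groups whole sentences into chunks under a word budget (a rough stand-in for a token budget, which is an assumption; a stricter version would count tokens with the model's tokenizer) and sends each chunk to the model in turn. `make_llama_cleaner` assumes the llama-cpp-python bindings and a local GGUF model file; the prompt wording and the `n_ctx` and `max_words` values are placeholders, not what the project used.

```python
import re
from typing import Callable

CLEANUP_PROMPT = (
    "Rewrite this transcript excerpt as clear, grammatical prose. "
    "Keep every point made; do not summarise or add anything."
)


def chunk_text(text: str, max_words: int = 300) -> list[str]:
    """Group whole sentences into chunks of at most max_words words.

    A single sentence longer than max_words becomes its own chunk.
    """
    if not text.strip():
        return []
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current, count = [], [], 0
    for sentence in sentences:
        n = len(sentence.split())
        if current and count + n > max_words:
            chunks.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        chunks.append(" ".join(current))
    return chunks


def clean_transcript(text: str, clean: Callable[[str], str], max_words: int = 300) -> str:
    """Clean each chunk one after another and join the results as paragraphs."""
    return "\n\n".join(clean(chunk) for chunk in chunk_text(text, max_words))


def make_llama_cleaner(model_path: str, n_ctx: int = 4096) -> Callable[[str], str]:
    """Build a clean() function backed by a local llama.cpp model."""
    from llama_cpp import Llama  # llama-cpp-python, imported lazily

    llm = Llama(model_path=model_path, n_ctx=n_ctx, verbose=False)

    def clean(chunk: str) -> str:
        result = llm.create_chat_completion(
            messages=[
                {"role": "system", "content": CLEANUP_PROMPT},
                {"role": "user", "content": chunk},
            ],
            temperature=0.2,
        )
        return result["choices"][0]["message"]["content"]

    return clean
```

Running the chunks sequentially is deliberate here: with a single local model, parallel requests mostly compete for the same CPU or GPU, so the simpler loop is unlikely to be much slower. Splitting on sentence boundaries also avoids handing the model half a sentence at either end of a chunk.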

I'd say the above would likely apply to most AI assistants. There were also some Claude-specific issues:

- The context length was an issue: I had to start new chats very frequently, and files had to be kept small to avoid running into output length limits. No bad thing, perhaps, as it led to more modular code.

- If I wasn't disciplined, I'd run into Claude's rate limits, which meant either switching to the weaker Haiku model or stopping work.

- When I used Haiku, the quality of the generated code went down, and errors and code that wouldn't even run increased. Sonnet is so much better than Haiku that I was surprised, given the results I've had with smaller Llama models.

Conclusion

All in all, I'd class this experiment as a success. At the end of it I had a working piece of software, and I suspect I would have run out of time and capacity to finish the project without the AI's help. Being able to noodle away at it on my phone was surprisingly helpful for drafting little bits, which I then integrated once back on a more powerful machine. My next steps would be to get Claude to refactor the code a bit and make it more consistent, and then likely extend it into a full web application. As Sonnet 3.7 and Claude Code were released recently, I'm going to see how they perform, and possibly compare the experience with using GitHub Copilot as well.